The Algorithmic Beauty Standard: How AI Shapes What We Think Is Attractive in 2025

Introduction

For most of human history, beauty standards were shaped by culture, art, fashion, magazines and celebrities. In 2025, our main beauty teacher is something else entirely: algorithms.

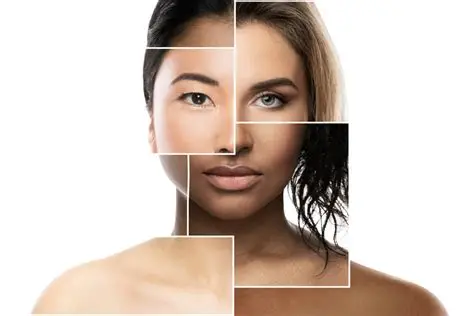

Beauty filters, AI-generated faces, recommendation systems and “smart” cameras are quietly creating a new, ultra-optimized version of attractiveness. Smooth skin, perfect symmetry, lighter skin tones in many regions, bigger eyes, smaller noses, fuller lips, to-the-millimeter proportions — all produced by machine learning models trained on oceans of images.

The result is what some researchers and critics now call the algorithmic beauty standard: a set of invisible rules coded into software that decides which faces are promoted, which are edited, and which are quietly downgraded or erased.

This article explores how AI is reshaping the idea of “attractive”, why beauty is becoming increasingly uniform, who gets excluded by these systems, and how individuals can protect their mental health in a world where even their own face is compared to a synthetic ideal.

What Is the Algorithmic Beauty Standard?

The algorithmic beauty standard is the set of aesthetic preferences produced and enforced by AI systems: filters, recommendation models, facial recognition tools, editing apps and generative models.

It’s not officially written anywhere. But it exists in the way apps:

- smooth your skin automatically

- shrink your nose by default

- enlarge your eyes and lips

- lighten or “correct” your skin tone

- suggest certain jawlines, cheekbones and face shapes

- push specific types of faces to the top of feeds

These micro-adjustments, repeated billions of times, create a new norm: a face that almost looks human, but is actually closer to an AI composite of “what performs best”.

From “Instagram Face” to “Meta Face”

A few years ago, people talked about “Instagram Face”: a highly edited, hyper-feminine, sculpted look that dominated social media.

In 2025, the trend has evolved into what some observers call “meta face”:

- almost no pores or texture

- precise symmetry

- eyes slightly larger than average

- a narrow nose

- plump lips

- youthful but strangely timeless

This “meta face” is increasingly produced not by surgeons or makeup artists, but by AI beauty filters and generative models used in campaigns, beauty pageants and influencer branding.

How AI Learns What “Beauty” Looks Like

AI does not wake up one day and decide what is beautiful. It learns from data.

Most beauty-related AI systems are trained on:

- social media photos with high engagement

- images used in beauty and fashion campaigns

- celebrity faces and influencer content

- user-rated “attractiveness” datasets

These datasets carry human bias: Eurocentric features, slim bodies, young skin, able-bodied faces, often lighter skin tones, heteronormative beauty ideals, and a narrow range of noses, hair textures and facial structures.

The Feedback Loop

Once the AI learns this biased version of beauty, it:

- creates filters that move users closer to that look

- promotes photos that match the learned template

- downranks or ignores images outside that template

Then people see which faces “perform” better online — and start editing themselves to match what the algorithm rewards. Over time, the feedback loop tightens:

- Biased data defines beauty for the AI.

- AI filters and feeds reinforce that beauty.

- People adapt to match the AI aesthetic.

- New data fed into AI becomes even more uniform.

The result is a self-fulfilling prophecy of beauty, generated by code.
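The loop above can be illustrated with a deliberately simplified simulation. This is a sketch, not a model of any real platform. It assumes, hypothetically, that each face can be reduced to one number on a made-up feature axis, that the “template” an AI learns is simply the average of its training data, and that a filter moves every face a fixed fraction (`pull`) of the way toward that template before the filtered faces become the next round’s training data. The function name `feedback_loop` and all values are illustrative.

```python
import statistics


def feedback_loop(faces, pull=0.3, rounds=5):
    """Toy model of the filter feedback loop (hypothetical, not a real system).

    Each round:
      1. the "AI" learns a template: here, simply the mean of the current faces;
      2. a filter moves every face `pull` of the way toward that template;
      3. the filtered faces become the next round's training data.
    Returns the spread (population standard deviation) of faces after each round,
    starting with the unfiltered population.
    """
    spreads = [statistics.pstdev(faces)]
    for _ in range(rounds):
        template = statistics.fmean(faces)                  # learned "beauty" template
        faces = [f + pull * (template - f) for f in faces]  # filter nudges each face toward it
        spreads.append(statistics.pstdev(faces))            # diversity left in the new data
    return spreads


# Hypothetical population: five faces spread evenly along one made-up feature axis.
population = [0.1, 0.3, 0.5, 0.7, 0.9]
for i, spread in enumerate(feedback_loop(population)):
    print(f"round {i}: spread = {spread:.3f}")
```

Under these assumptions the average face never moves, but the spread shrinks by a factor of `1 - pull` every round (from about 0.283 to about 0.048 after five rounds with the values above). Real systems are far more complex; the sketch only shows how a loop with this shape pushes data toward uniformity.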

Beauty Filters: Harmless Fun or Neurological Training?

Beauty filters are usually marketed as entertainment: “try this cute filter”, “smooth skin”, “subtle glam”, “AI makeover”.

But a growing body of research suggests that repeated use of beauty filters:

- increases body dissatisfaction

- lowers self-esteem

- makes people prefer their filtered face to their real one

- raises the desire for cosmetic changes or surgery

Filter Dysmorphia

Therapists are now describing a phenomenon sometimes called “filter dysmorphia”: people look at their real face and feel that something is “wrong” — not because their face has changed, but because their brain has adjusted to the filtered version as the new baseline.

When the brain is exposed every day to:

- a thinner face

- lighter under-eyes

- a smaller nose

- a sharper jawline

…the unedited face starts to feel “incorrect”.

Beauty Filters and Younger Users

Especially worrying is the impact on children and teenagers, who may start using such filters before they have fully developed their own sense of identity and self-image.

Reporting that draws on recent research suggests that filters inspired by cosmetic surgery trends and “perfect skin” routines are contributing to rising anxiety, poor body image and mental health strain among girls and young people (The Times of India).

AI, Race and the Politics of the Digital Face

The algorithmic beauty standard is not only about symmetry and smoothness — it’s also about power.

Studies and investigative reports show that many filters:

- lighten skin tone

- narrow noses

- straighten or soften certain hair textures

- soften or erase features coded as “ethnic”

This quietly reinforces Eurocentric beauty ideals, with the heaviest impact on Black, brown and other racialized users ([The Guardian](https://www.theguardian.com/world/ng-interactive/2025/nov/13/black-youth-filters-mental-health)).

Racialized Harm

For Black adolescents and young adults in particular, research has found that racist online experiences, algorithmic bias and beauty filters that move faces toward whiteness are associated with increased anxiety, depression and lower well-being ([The Guardian](https://www.theguardian.com/world/ng-interactive/2025/nov/13/black-youth-filters-mental-health)).

In other words, AI is not only distorting what “beautiful” looks like — it is also quietly reproducing old racial hierarchies in a new, glossy, digital form.

From Filters to Surgery: When AI Becomes the New Beauty Benchmark

One of the most disturbing shifts reported by surgeons and dermatologists around the world is this: clients are no longer just bringing in photos of celebrities as references.

Increasingly, they are bringing in AI-edited versions of themselves and asking to look like that “perfect” synthetic face ([The Times of India](https://timesofindia.indiatimes.com/life-style/spotlight/ai-filters-redefining-beauty-standards-disturbing-trends-in-cosmetic-surgery/articleshow/123500152.cms)).

AI as the New Ideal

Surgeons report:

- patients wanting poreless, textureless skin

- nose reshaping that matches filter proportions

- jawlines and cheeks inspired by AI avatars

- requests for “subtle tweaks” that mimic digital enhancements

This marks a shift from “I want to look like this model” to “I want to look like my filtered self”.

Cosmetic Pressure and Mental Health

Research continues to link intensive beauty filter use and exposure to idealized digital faces with:

- higher self-criticism

- body dissatisfaction

- increased desire for cosmetic procedures

- lower self-esteem, especially among young women and girls ([ResearchGate](https://www.researchgate.net/publication/397739299_The_Impact_of_Social_Media_Filters_on_Body_Image_Dissatisfaction_Among_Young_Adults))

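The algorithmic beauty standard isn’t just aesthetic. It is also economic, because it drives demand for cosmetic procedures, and psychological, because it reshapes how people judge their own faces.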

Recommendation Algorithms and Who Gets Called “Beautiful”

Beauty is not only edited — it’s also ranked.

Social platforms use recommendation systems that decide:

- which creators appear on the “For You” page

- which selfies are boosted

- which bodies and faces show up in trending content

If a certain type of face:

- gets more likes

- gets longer watch time

- generates more comments

…the algorithm learns to push it further — reinforcing that beauty type as the default.
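This “push it further” dynamic can be sketched as a toy ranker. The scoring formula is an assumption: platforms do not publish their real ranking weights, so `WEIGHTS`, `engagement_score`, `simulate` and the `boost` factor below are hypothetical and exist only for illustration. The score combines the three signals listed above (likes, watch time, comments); `simulate` then assumes that the extra exposure given to the top-ranked post turns into proportionally more engagement in the next round.

```python
from dataclasses import dataclass


@dataclass
class Post:
    creator: str
    likes: int
    watch_seconds: float
    comments: int


# Hypothetical weights, invented for this sketch; real platforms keep theirs private.
WEIGHTS = {"likes": 1.0, "watch_seconds": 0.5, "comments": 2.0}


def engagement_score(post: Post) -> float:
    """Combine likes, watch time and comments into one ranking score."""
    return (WEIGHTS["likes"] * post.likes
            + WEIGHTS["watch_seconds"] * post.watch_seconds
            + WEIGHTS["comments"] * post.comments)


def rank(posts: list[Post]) -> list[Post]:
    """Order posts for a feed, highest engagement first."""
    return sorted(posts, key=engagement_score, reverse=True)


def simulate(posts: list[Post], rounds: int = 3, boost: float = 0.5) -> list[Post]:
    """Each round, the top-ranked post gets extra exposure, which (by assumption)
    raises all of its engagement signals by `boost`. Counts are rounded down to
    whole numbers. Posts are updated in place and returned in ranked order."""
    for _ in range(rounds):
        top = rank(posts)[0]
        top.likes = int(top.likes * (1 + boost))
        top.watch_seconds *= 1 + boost
        top.comments = int(top.comments * (1 + boost))
    return rank(posts)


# Hypothetical example: one post starts with a small lead in every signal.
posts = [
    Post("template_face", likes=120, watch_seconds=300.0, comments=10),
    Post("other_face", likes=100, watch_seconds=280.0, comments=9),
]
for post in simulate(posts):
    print(post.creator, engagement_score(post))
```

With these made-up numbers, a starting lead of 290 to 258 grows to 977.25 to 258 after three rounds: a small early advantage, compounded by exposure, becomes the face that everyone sees.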

Impact on Diversity

Research and critical essays on AR filters and AI beauty tools highlight that they often:

- reward faces closer to narrow, westernized ideals

- erase age, disability and visible difference

- flatten cultural variety into a single globalized aesthetic ([arXiv](https://arxiv.org/html/2506.19611v1))

This creates the illusion that “most people look like this” — when in reality, most people do not.

How the Algorithmic Beauty Standard Affects the Mind

The human brain is plastic. It adapts to what it repeatedly sees.

When your daily visual diet consists of:

- AI-edited faces

- filtered influencers

- skin with no texture

- bodies with no natural variation

…your internal baseline for “normal” shifts.

Three Key Psychological Effects

1. Self-Objectification

People start to see their bodies more as objects to be optimized than as living, functional, complex selves. Studies on slimming and beautifying filters suggest they can increase self-objectification, anti-fat bias and the desire to change one’s body ([ScienceDirect](https://www.sciencedirect.com/science/article/abs/pii/S074756322400387X)).

2. Constant Comparison

Social media already amplifies comparison. When those comparisons are to algorithmically perfected images, the gap between “me” and “them” feels even larger.

3. Dissociation From the Real Face

Many users report feeling disconnected from their unfiltered appearance — like their real face is the “before” and their online face is the “after”, even when no procedures have been done.

Is AI Only Making Things Worse?

Not necessarily. There is also a growing movement to use AI to challenge and diversify beauty norms.

Positive Uses of AI in Beauty

- generative models that showcase diverse skin tones, body types and facial features

- tools that deliberately avoid “slimming” or “whitening” by default

- brands using AI to audit their campaigns for lack of representation ([Maxx Report](https://blog.looksmaxxreport.com/ai-beauty-standard-analysis/))

Some research also suggests that AI filters can be used in experimental settings to expose and reduce certain cognitive biases: for example, by showing how strongly our judgments of competence or trustworthiness are influenced by appearance, and then teaching people to question those automatic judgments ([elias-ai.eu](https://elias-ai.eu/news/the-impact-of-beauty-filters-on-perceptions-and-cognitive-biases/)).

How to Protect Your Mind From the Algorithmic Beauty Standard

We can’t fully escape AI — but we can reduce its power over how we see ourselves.

1. Audit Your Filter Use

Ask yourself honestly:

- How often do I use beauty filters?

- Do I still feel comfortable posting unfiltered photos?

- Do I feel “ugly” or “wrong” when I see my natural face?

If you answered “often” to the first question, “no” to the second or “yes” to the third, your brain may already be calibrating to the algorithmic standard.

2. Take No-Filter Days

Intentionally post or keep photos unedited, just for you, your close friends or your private gallery. Seeing your real face regularly can help your mind recalibrate to reality and soften the gap between your on-screen and offline self.

3. Curate a Diverse Visual Feed

Follow creators with:

- different body types

- different ages

- different races and features

- visible disabilities or differences

The more variety your brain sees, the more it relaxes its grip on a narrow definition of beauty.

4. Learn to Recognize Edited and AI-Generated Content

Educate yourself on:

- common signs of filters (blurred skin, over-smooth lighting, warped backgrounds)

- AI tells (strange lighting, uncanny symmetry, ghost details in hair or jewelry)

The moment you can say “This is AI-edited” or “This is curated”, you weaken its psychological spell.

5. Talk Openly About It

With friends, siblings, kids, partners — name what is happening: “This isn’t real skin”, “That face is AI-generated”, “No one looks like this all the time.”

Silence protects the algorithmic standard. Conversation exposes it.

Conclusion: Beauty in the Age of the Algorithm

The algorithmic beauty standard is not an accident. It is a by-product of design choices:

- which faces are fed into models

- which filters are offered by default

- which images get boosted by recommendation systems

- which metrics companies optimize for: clicks, likes, watch time

In 2025, beauty is no longer just cultural — it is computational.

But algorithms do not have the final word. Humans still decide what to build, what to reward and what to resist.

Reclaiming beauty means:

- seeing AI for what it is — a tool, not a truth

- choosing where we place our attention

- valuing texture, age, difference and reality

- remembering that no line of code can define our worth

The algorithmic beauty standard may influence how we see ourselves — but it does not get to decide who we are.

External Sources & References

- Hussain, B. (2025). How AI and social media are redefining aesthetic norms.

- Habib, A. (2022). Snapchat filters and shifting self-perception.

- Psychology Today. The hidden danger of online beauty filters.

- ELLIS Alicante. The Beautyverse project on AI and beauty norms.

- The impact of beauty filters on perceptions and cognitive biases (2024).

- Vogue Business. AI beauty pageants and the age of “meta face”.